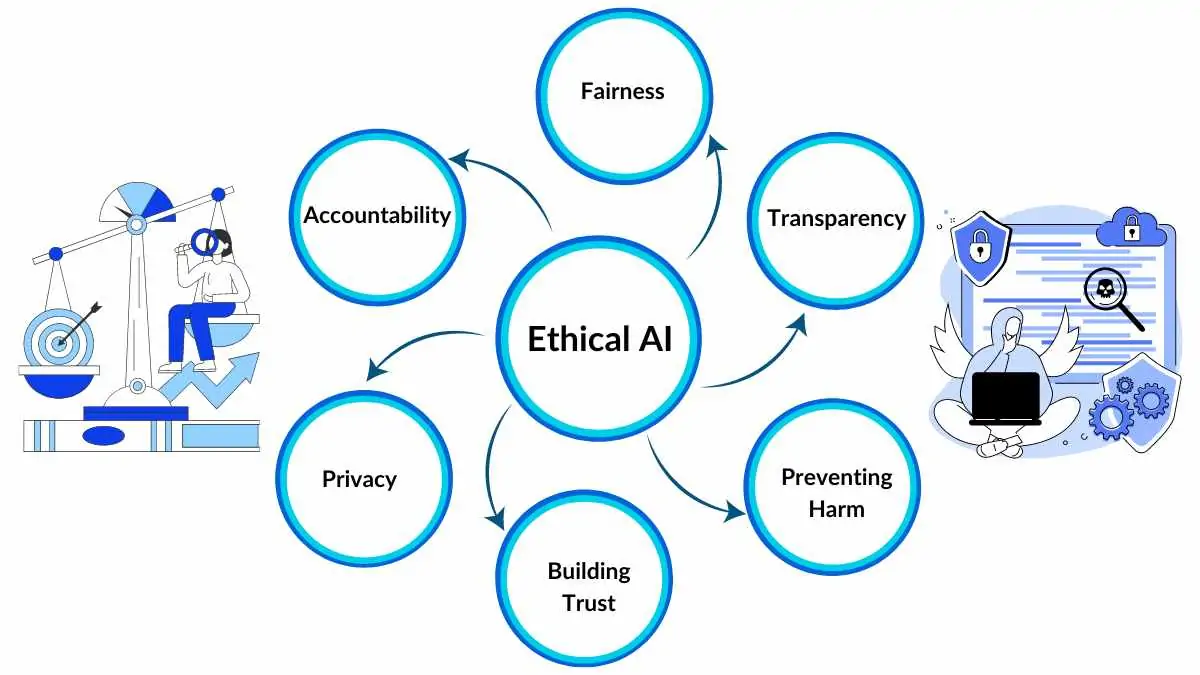

Artificial intelligence has become a new architect of decision-making, quietly shaping how we are hired, treated, and even judged. Yet, like an artist unaware of a smudge on the canvas, AI can reproduce the imperfections in the data it learns from. These imperfections, or biases, may be invisible at first glance, but they can seriously undermine fairness. The task of the modern analyst, therefore, is not only to make systems intelligent but also to ensure they remain just and ethical.

The Mirror Effect: When Data Reflects Our Flaws

Every dataset is a mirror: it doesn't just capture facts but also reflects the world's imperfections. When algorithms train on biased data, they can reproduce and even amplify human prejudices. A model that screens job candidates might favour one gender, or a loan-approval model might systematically approve fewer applicants from certain communities.

Analysts act as custodians of this reflection. Their role is to polish the mirror—to identify where distortion begins and to correct it before decisions are automated. Bias auditing isn’t a one-time activity; it’s a mindset of continuous vigilance.

For learners entering the analytics field, business analyst classes in Chennai can offer practical exposure to real-world auditing frameworks. Such programs can demonstrate how fairness, accountability, and transparency are embedded throughout the lifecycle of AI development.

The Anatomy of Bias: Understanding Its Roots

Bias rarely stems from malicious intent; more often, it seeps in through oversight. Data collected from historical records naturally carries the societal imbalances of its time, and when those patterns are left unexamined, a model learns them and carries them into future predictions.

Analysts, therefore, must become ethical detectives, investigating not just the numbers but the narratives behind them. From how data is sampled to how variables are encoded, every stage can let bias leak in. A seemingly neutral variable such as a postal code, for example, can act as a proxy for a protected attribute like ethnicity.
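
One way to start that detective work is to check whether a seemingly neutral feature tracks a protected attribute closely enough to act as a proxy. The Python sketch below is a minimal, stdlib-only illustration under stated assumptions: the helper `proxy_shares`, the zone labels, and the toy records are all hypothetical, and a real audit would use far larger samples and more formal measures of association.

```python
from collections import defaultdict


def proxy_shares(feature_values, protected_flags):
    """For each value of a feature, return the share of records that belong
    to the protected group (hypothetical helper, not a library API)."""
    counts = defaultdict(lambda: [0, 0])  # value -> [protected count, total count]
    for value, is_protected in zip(feature_values, protected_flags):
        counts[value][0] += int(is_protected)
        counts[value][1] += 1
    return {value: prot / total for value, (prot, total) in counts.items()}


# Hypothetical toy data: residential zone vs. membership in a protected group (1 = member).
zones = ["zone_A", "zone_A", "zone_A", "zone_B", "zone_B", "zone_B"]
protected = [1, 1, 1, 0, 0, 1]

shares = proxy_shares(zones, protected)
# A wide spread between zones means the zone alone largely reveals group membership.
spread = max(shares.values()) - min(shares.values())
print(f"spread = {spread:.2f}")  # prints "spread = 0.67"
```

Here every `zone_A` record belongs to the protected group, so a model given the zone could effectively learn group membership even if the protected attribute itself were dropped. How large a spread counts as "too large" is a judgment call for the analyst, not a fixed standard.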

In structured analytical training, the curriculum of business analyst classes in Chennai typically includes data validation and impact assessment techniques. Students learn to question assumptions so that models respect diversity rather than replicate inequality.

Auditing Frameworks: Tools for Fairness

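Bias detection frameworks work like diagnostic tools in medicine: they reveal the health of an algorithm. Techniques such as fairness metrics (for example, statistical parity difference), disparate impact analysis (comparing the rates at which different groups receive a favourable outcome), and counterfactual testing (checking whether a prediction changes when only a protected attribute is altered) are designed to measure bias quantitatively.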
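
To make disparate impact analysis concrete, here is a minimal Python sketch, assuming binary decisions (1 = favourable) and a single protected attribute with two groups. It computes each group's selection rate, the statistical parity difference (unprivileged rate minus privileged rate), and the disparate impact ratio (unprivileged rate divided by privileged rate). The function names and data are hypothetical; the 0.8 threshold reflects the "four-fifths rule" from US employment-selection guidelines, a common rule of thumb rather than a universal standard.

```python
def selection_rate(outcomes, groups, group):
    """Fraction of members of `group` who received the favourable outcome (1)."""
    selected = [y for y, g in zip(outcomes, groups) if g == group]
    return sum(selected) / len(selected)


def fairness_report(outcomes, groups, unprivileged, privileged):
    """Two common group-fairness metrics; assumes the privileged rate is non-zero."""
    rate_u = selection_rate(outcomes, groups, unprivileged)
    rate_p = selection_rate(outcomes, groups, privileged)
    return {
        "statistical_parity_difference": rate_u - rate_p,
        "disparate_impact": rate_u / rate_p,
    }


# Hypothetical screening decisions: 1 = shortlisted, 0 = rejected.
decisions = [1, 0, 0, 0, 1, 1, 1, 0, 1, 1]
gender = ["F", "F", "F", "F", "F", "M", "M", "M", "M", "M"]

report = fairness_report(decisions, gender, unprivileged="F", privileged="M")
print(report)  # prints {'statistical_parity_difference': -0.4, 'disparate_impact': 0.5}

# Four-fifths rule of thumb: a ratio below 0.8 is a warning sign worth investigating.
if report["disparate_impact"] < 0.8:
    print("Potential adverse impact: investigate further")
```

A ratio of 0.5 means women in this toy sample are shortlisted at half the rate of men. The metric flags the disparity; it does not explain it, which is where the investigative work described above comes in.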

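Toolkits such as IBM's open-source AI Fairness 360 (AIF360) and Google's What-If Tool help analysts simulate decisions, identify sources of bias, and test mitigation strategies. AIF360 bundles a broad set of fairness metrics with bias-mitigation algorithms, while the What-If Tool lets analysts edit individual data points and see how a model's predictions respond. These tools translate ethical principles into measurable outcomes, turning fairness from an abstract ideal into an operational reality.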
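
Counterfactual "what if" probing of the kind these tools support can be sketched in a few lines of Python: keep every input fixed, swap only the protected attribute, and flag any record whose decision changes. This is a hedged illustration, not the API of AIF360 or the What-If Tool; `hypothetical_model` is a deliberately biased stand-in for a trained model, and the applicant records are invented.

```python
def hypothetical_model(applicant):
    """Toy scoring rule standing in for a trained model; deliberately biased."""
    score = 0.5 * applicant["years_experience"] / 10 + 0.4 * applicant["skills_match"]
    if applicant["gender"] == "M":
        score += 0.15  # hidden bias the counterfactual test should expose
    return score >= 0.6  # True = shortlisted


def counterfactual_flips(model, records, attribute, swap):
    """Return the records whose decision changes when only `attribute` is swapped."""
    flipped = []
    for record in records:
        counterfactual = {**record, attribute: swap[record[attribute]]}
        if model(record) != model(counterfactual):
            flipped.append(record)
    return flipped


applicants = [
    {"id": "A", "years_experience": 6, "skills_match": 0.6, "gender": "F"},
    {"id": "B", "years_experience": 10, "skills_match": 0.9, "gender": "M"},
    {"id": "C", "years_experience": 2, "skills_match": 0.3, "gender": "M"},
]

flipped = counterfactual_flips(hypothetical_model, applicants, "gender", {"F": "M", "M": "F"})
print([a["id"] for a in flipped])  # prints ['A']
```

Applicant A is rejected as "F" but would be shortlisted as "M" with identical qualifications, which is exactly the disparity a counterfactual test is meant to surface. A single-attribute swap will not catch bias routed through proxy features, so it complements rather than replaces group-level metrics.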

From Detection to Prevention: Building Ethical Pipelines

Bias detection is just the beginning; true responsibility lies in prevention. Analysts must build pipelines that promote fairness from the start, so that data collection, feature engineering, and model evaluation are each checked against explicit fairness criteria.
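
One well-known preprocessing technique for building fairness in from the start is reweighing (Kamiran and Calders), which AIF360 also offers as a preprocessing algorithm. It gives each training record the weight P(group) * P(label) / P(group, label), so that group membership and outcome become statistically independent in the weighted data. The stdlib-only Python sketch below illustrates the idea with hypothetical data; it is not AIF360's implementation.

```python
from collections import Counter


def reweighing_weights(groups, labels):
    """Weight each record by P(group) * P(label) / P(group, label)."""
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return [
        (group_counts[g] * label_counts[y]) / (n * joint_counts[(g, y)])
        for g, y in zip(groups, labels)
    ]


def weighted_positive_rate(groups, labels, weights, group):
    """Weighted share of favourable labels (1) within one group."""
    numerator = sum(w * y for g, y, w in zip(groups, labels, weights) if g == group)
    denominator = sum(w for g, w in zip(groups, weights) if g == group)
    return numerator / denominator


# Hypothetical historical data: the favourable label is rarer for group "F".
groups = ["F", "F", "F", "F", "M", "M", "M", "M"]
labels = [1, 0, 0, 0, 1, 1, 1, 0]

weights = reweighing_weights(groups, labels)
for group in ("F", "M"):
    before = weighted_positive_rate(groups, labels, [1.0] * len(labels), group)
    after = weighted_positive_rate(groups, labels, weights, group)
    print(f"{group}: before={before:.2f}, after={after:.2f}")
# prints:
# F: before=0.25, after=0.50
# M: before=0.75, after=0.50
```

The data itself is unchanged; only each record's influence during training shifts. Many learners accept such weights directly; scikit-learn's `LogisticRegression`, for instance, takes them through the `sample_weight` argument of `fit`.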

Regular audits, diverse and representative data sampling, and transparency reports (such as model cards that document a model's intended use and its performance across groups) should be part of every AI system's lifecycle. When these safeguards are built in early, they help prevent downstream ethical failures that can damage credibility and trust.
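
A recurring audit of data diversity can begin with a simple representation check: compare each group's share of the training data with its share of a reference population. In the hedged Python sketch below, the helper `representation_gaps`, the group labels, the reference shares, and the 0.05 tolerance are all hypothetical placeholders that an analyst would replace with figures suited to the actual context.

```python
from collections import Counter


def representation_gaps(sample_groups, reference_shares, tolerance=0.05):
    """Return groups whose share of the sample falls short of their reference
    share by more than `tolerance` (an illustrative default, not a standard)."""
    counts = Counter(sample_groups)
    n = len(sample_groups)
    gaps = {}
    for group, expected in reference_shares.items():
        observed = counts.get(group, 0) / n
        if expected - observed > tolerance:
            gaps[group] = round(expected - observed, 3)
    return gaps


# Hypothetical training sample and reference population shares.
sample = ["urban"] * 80 + ["semi_urban"] * 15 + ["rural"] * 5
reference = {"urban": 0.5, "semi_urban": 0.3, "rural": 0.2}

gaps = representation_gaps(sample, reference)
print(gaps)  # prints {'semi_urban': 0.15, 'rural': 0.15}
```

The check only flags shortfalls. Whether to collect more data, resample, or reweight in response remains a decision the analyst should document, and it is exactly the kind of decision a transparency report can record.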

Professionals who develop such pipelines are more than analysts; they are ethical architects, shaping systems that serve everyone fairly.

The Human Element in Ethical AI

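Even the most advanced bias-detection framework depends on human judgment. Machines can quantify disparities, but only people can interpret their implications responsibly. Deciding which fairness metric to apply is itself a value judgment, because widely used criteria, such as calibration and equal false-positive and false-negative rates across groups, cannot in general all hold at once when groups have different base rates. This intersection of technology and humanity defines modern data ethics.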

An analyst’s responsibility extends beyond code—it involves empathy, awareness, and critical thinking. They must ask the uncomfortable questions: Who benefits from this model? Who could be harmed by it? These reflections transform analytics from computation into conscience.

Conclusion

Ethical AI isn’t just about compliance—it’s about cultivating trust. As algorithms increasingly make decisions that affect lives, ensuring fairness becomes not just a technical skill but a moral imperative.

For aspiring analysts, understanding bias and practising ethical vigilance are core to professional growth. With the right tools, mindset, and continuous learning, they can ensure AI remains a mirror that reflects our progress—not our prejudice.